Cloud-native applications must deliver performance, reliability, and cost efficiency simultaneously. One of the most powerful enablers of this is elastic scaling, where computing resources automatically expand or contract based on real-time demand.

In this article, we’ll break down the technical foundations of elastic scaling (covering Load Balancers, Auto-Scaling Groups, the Kubernetes Horizontal Pod Autoscaler, the Metrics Server, and message queues), explain each technology in detail, and then apply them to Hotel/Resort, Restaurant, and Travel Agency Internal Management Systems.

Elastic Scaling: The Core Concept

Elastic scaling allows your application to adapt to workload fluctuations automatically. Instead of provisioning static infrastructure (which is either underutilized or overwhelmed), elastic scaling ensures the right resources are available at the right time.

There are two primary approaches:

- Vertical Scaling (adding more CPU or memory to a single server).

- Horizontal Scaling (adding more servers/containers).

In cloud environments, elastic scaling is usually horizontal and is implemented through Auto-Scaling Groups (ASG) for virtual machines or the Kubernetes Horizontal Pod Autoscaler (HPA) for containers.

Core Technology Deep Dives

1. Load Balancer

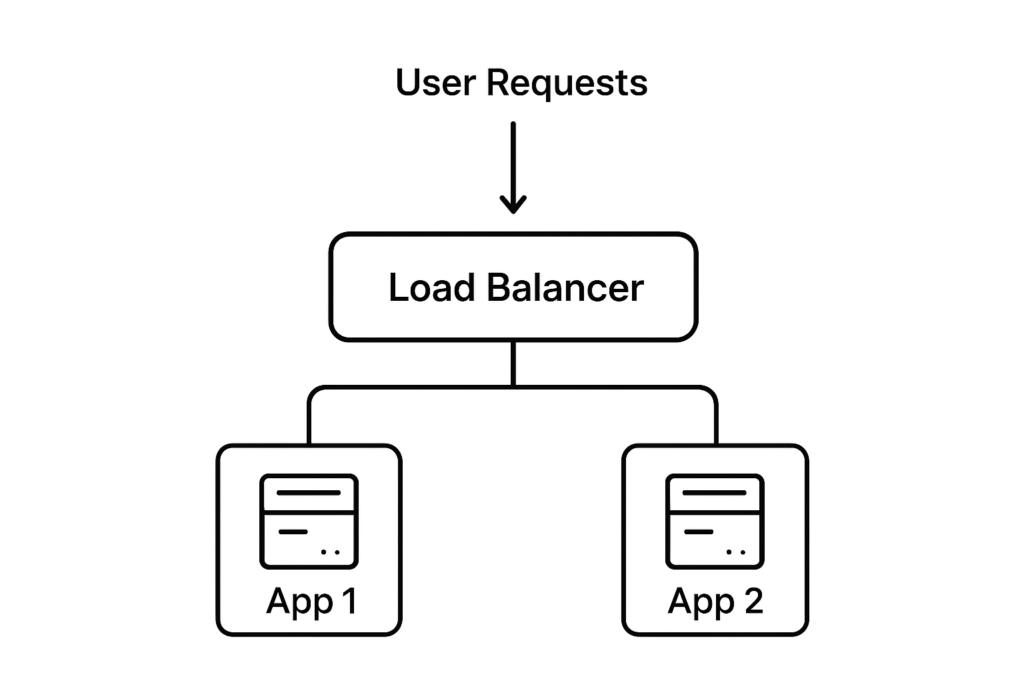

A Load Balancer is a networking component that distributes incoming traffic across multiple servers or containers.

- Why it matters for scaling: Without a load balancer, all users might hit a single server and overwhelm it. With one, newly added instances start receiving traffic automatically, so scaling is seamless to users.

- Types:

  - Layer 4 (Transport level) → balances based on IP/Port.

  - Layer 7 (Application level) → balances based on HTTP headers, cookies, or content.

2. Auto-Scaling Groups (ASG)

An Auto-Scaling Group (the AWS name; Azure Virtual Machine Scale Sets and GCP Managed Instance Groups are the equivalents) manages a set of identical virtual machines.

- Key features:

  - Launch new instances when a metric such as CPU or memory utilization exceeds a threshold.

  - Terminate idle instances during low demand.

  - Keep the instance count between a configured minimum and maximum.

3. Kubernetes Horizontal Pod Autoscaler (HPA)

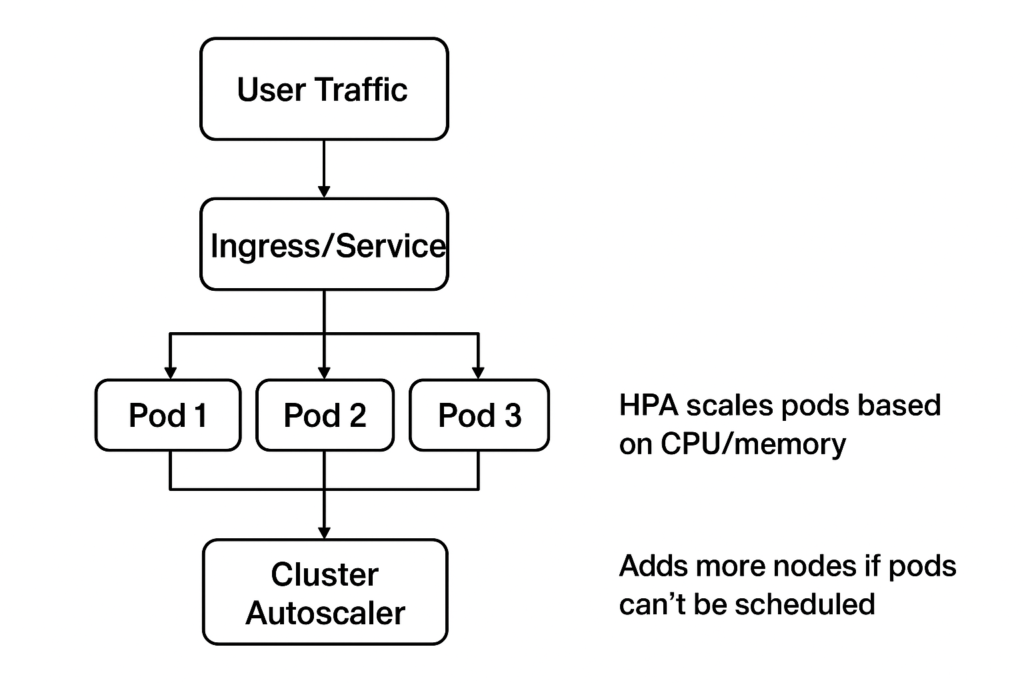

Kubernetes is the de facto standard for container orchestration.

The HPA scales the number of pod replicas in a Deployment (each pod wraps one or more containers) based on observed metrics such as CPU utilization.

4. Metrics Server

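The Metrics Server is a cluster add-on that collects recent resource usage data (CPU, memory) and exposes it through the Kubernetes Metrics API.

- HPA depends on this API to make CPU- and memory-based scaling decisions.

- It scrapes data from the kubelets on worker nodes.

Without the Metrics Server (or another provider of the resource metrics API), HPA cannot scale on CPU or memory. Scaling on custom or external metrics requires a separate metrics adapter, such as one backed by Prometheus.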
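The calculation that links the Metrics Server to the HPA can be sketched in a few lines of Python. Assuming per-pod CPU usage values in the Kubernetes quantity format the Metrics API returns (strings such as `250m` or `350000000n`) plus the matching CPU requests, the sketch computes the utilization percentage an HPA compares against its target, taken here as total usage over total requests. The function names are hypothetical, and only CPU quantities with the `n`, `u`, or `m` suffix (or plain core counts) are handled.

```python
from decimal import Decimal

# Value of each Kubernetes quantity suffix used for CPU, in cores.
_CPU_SUFFIXES = {"n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3")}


def parse_cpu(quantity: str) -> Decimal:
    """Convert a CPU quantity such as '250m', '350000000n' or '2' to cores."""
    suffix = quantity[-1]
    if suffix in _CPU_SUFFIXES:
        return Decimal(quantity[:-1]) * _CPU_SUFFIXES[suffix]
    return Decimal(quantity)  # no suffix: whole or fractional cores, e.g. '2' or '0.5'


def average_cpu_utilization(usages: list[str], requests: list[str]) -> float:
    """Percent utilization: total CPU used by the pods over total CPU requested.

    Without CPU requests there is nothing to divide by, which is why a
    Utilization target needs requests to be set on the containers.
    """
    if not usages or len(usages) != len(requests):
        raise ValueError("need one usage value and one request value per pod")
    used = sum(parse_cpu(u) for u in usages)
    requested = sum(parse_cpu(r) for r in requests)
    return float(used / requested * 100)


# Three pods, each requesting 500m (half a core) of CPU.
print(average_cpu_utilization(["400m", "350000000n", "300m"], ["500m"] * 3))  # 70.0
```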

5. Message Queues (Kafka / RabbitMQ)

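When scaling out, systems often need asynchronous processing.

- A message queue buffers incoming requests so that traffic bursts are absorbed and workers process them at a steady pace.

- Example: generating travel-package recommendations may take time, so requests are placed on a queue and handled by background workers instead of blocking the user.

- Queue depth also serves as a scaling signal: a growing backlog indicates that more workers are needed.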
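The buffering pattern can be illustrated without a real broker. This minimal Python sketch uses the standard library's `queue.Queue` as an in-process stand-in for Kafka or RabbitMQ: the web tier calls `submit()` and returns immediately, while a small pool of worker threads drains the queue in the background. `build_recommendation` is a hypothetical placeholder for the slow work; a real broker would add durability and let the workers run as separate, independently scaled processes.

```python
import queue
import threading

# In-process stand-in for a broker queue (hypothetical; not Kafka/RabbitMQ).
jobs: queue.Queue = queue.Queue()
results: dict[str, str] = {}
results_lock = threading.Lock()


def build_recommendation(customer_id: str) -> str:
    """Hypothetical placeholder for slow work such as scoring travel packages."""
    return f"packages-for-{customer_id}"


def worker() -> None:
    """Consume jobs until a None sentinel arrives."""
    while True:
        customer_id = jobs.get()
        try:
            if customer_id is None:
                return
            recommendation = build_recommendation(customer_id)
            with results_lock:
                results[customer_id] = recommendation
        finally:
            jobs.task_done()  # mark the item processed, including the sentinel


def submit(customer_id: str) -> None:
    """Web tier: enqueue the request and return to the user immediately."""
    jobs.put(customer_id)


workers = [threading.Thread(target=worker) for _ in range(3)]
for t in workers:
    t.start()

for cid in ["c1", "c2", "c3", "c4", "c5"]:
    submit(cid)

jobs.join()         # wait until every submitted job has been processed
for _ in workers:
    jobs.put(None)  # one sentinel per worker to shut it down
for t in workers:
    t.join()

print(sorted(results))  # ['c1', 'c2', 'c3', 'c4', 'c5']
```

In this sketch `jobs.qsize()` gives the current backlog; with a real broker, the equivalent queue-depth or consumer-lag metric is what an autoscaler would watch to size the worker pool.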

Load Balancer in Depth

A Load Balancer (LB) is the “traffic cop” of a distributed application. Its job is to accept client requests and intelligently route them to backend servers or containers so that no single instance is overwhelmed.

Imagine your Hotel, Restaurant, or Travel Agency app has 10 web servers running. Without a load balancer, users would need to know which server to contact. With a load balancer:

- Users only see one public endpoint (like app.example.com).

- The LB decides where to forward each request.

- If one server fails, the LB removes it from the pool → ensuring high availability.

Why Load Balancers are Critical for Scaling

- Distributes workload evenly: prevents one server from handling too many requests while others sit idle.

- Enables Auto-Scaling: as new servers or pods are added (via ASG or Kubernetes HPA), the LB automatically routes traffic to them.

- Handles Failover: if one instance crashes, traffic is shifted to healthy ones (detected via health checks).

- Supports Session Persistence (Sticky Sessions): some apps (e.g., restaurant order tracking) need the same user to always land on the same server. LBs can enforce this via cookies or IP hashing.
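The four behaviors listed above can be modeled in a toy, in-memory Python class. This is an illustrative sketch, not how any particular load balancer is implemented: the `LoadBalancer` class, the backend names, and the SHA-256-based stickiness are assumptions chosen for clarity.

```python
import hashlib
from itertools import count
from typing import Optional


class LoadBalancer:
    """Toy balancer: round-robin over healthy backends, with optional stickiness."""

    def __init__(self) -> None:
        self.backends: list[str] = []
        self.healthy: set[str] = set()
        self._next = count()  # ever-increasing counter that drives round-robin

    def register(self, backend: str) -> None:
        # Called when an ASG or HPA adds an instance; it receives traffic at once.
        self.backends.append(backend)
        self.healthy.add(backend)

    def mark_unhealthy(self, backend: str) -> None:
        # Outcome of a failed health check: no new requests go to this backend.
        self.healthy.discard(backend)

    def route(self, session_id: Optional[str] = None) -> str:
        pool = [b for b in self.backends if b in self.healthy]
        if not pool:
            raise RuntimeError("no healthy backends")
        if session_id is not None:
            # Sticky session: hash the session cookie so a user keeps landing on
            # the same backend for as long as the healthy pool does not change.
            digest = hashlib.sha256(session_id.encode()).digest()
            return pool[int.from_bytes(digest[:8], "big") % len(pool)]
        return pool[next(self._next) % len(pool)]


lb = LoadBalancer()
for name in ["web-1", "web-2", "web-3"]:
    lb.register(name)

print([lb.route() for _ in range(4)])  # ['web-1', 'web-2', 'web-3', 'web-1']
lb.mark_unhealthy("web-2")             # failover: web-2 leaves the pool
print(sorted({lb.route() for _ in range(10)}))  # ['web-1', 'web-3']
print(lb.route("guest-42") == lb.route("guest-42"))  # True
```

Because the sticky choice is a hash taken modulo the pool size, adding or removing a backend remaps some sessions; production balancers typically avoid this churn with a balancer-issued cookie or consistent hashing.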

Types of Load Balancers

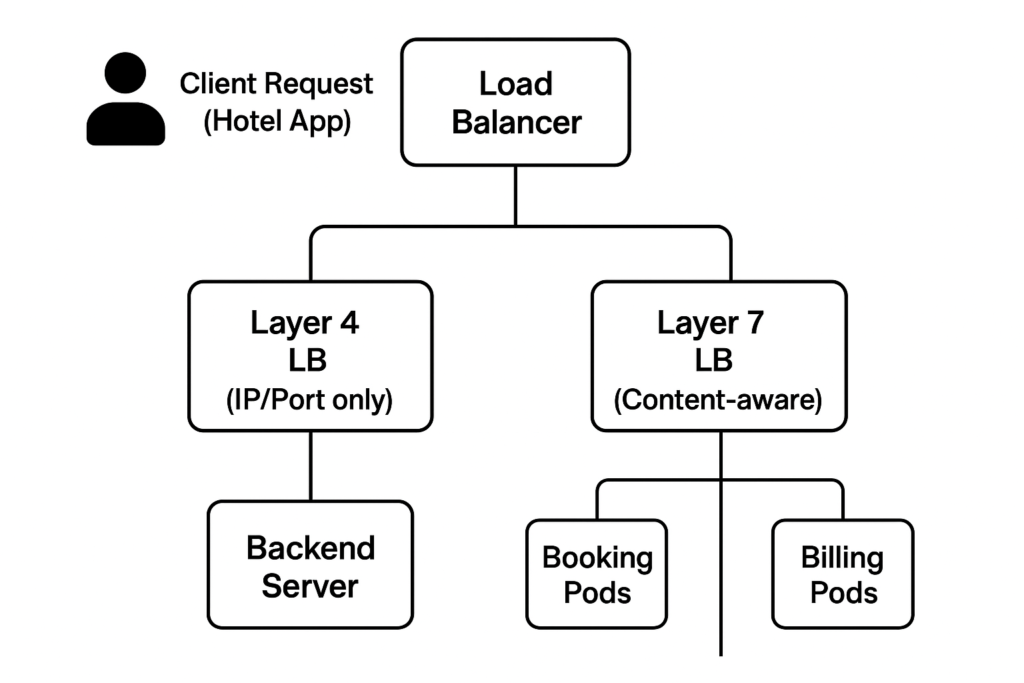

1. Layer 4 Load Balancer (Transport Layer)

- Works at the TCP/UDP level.

- Balances requests based on IP address and port.

- Does not inspect actual content (payload).

Example:

If your Travel Agency app receives 10,000 HTTPS connections on port 443, a Layer 4 LB spreads those TCP connections across backend servers without decrypting or reading them. Every request sent over a given connection reaches the same backend.

Pros: Fast, lightweight, low overhead.

Cons: Cannot make content-aware decisions (e.g., different rules for search vs payments).
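One common way for a Layer 4 balancer to spread connections without reading their contents is to hash the connection's 5-tuple (protocol, source IP, source port, destination IP, destination port) and use the result to pick a backend, so every packet of a TCP connection reaches the same server. The Python sketch below illustrates that idea with hypothetical addresses; production balancers use their own hashing and connection-tracking schemes.

```python
import hashlib
from collections import Counter
from typing import NamedTuple


class FiveTuple(NamedTuple):
    protocol: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def pick_backend(flow: FiveTuple, backends: list[str]) -> str:
    """Choose a backend from transport-layer fields only; the payload is never read."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


servers = ["10.0.1.10", "10.0.1.11", "10.0.1.12"]
conn = FiveTuple("tcp", "203.0.113.7", 51514, "198.51.100.20", 443)

# Every packet of this connection maps to the same server.
print(pick_backend(conn, servers) == pick_backend(conn, servers))  # True

# New connections from the same client differ only in their source port.
spread = Counter(pick_backend(conn._replace(src_port=p), servers)
                 for p in range(50000, 53000))
print(spread)
```

Counting the chosen backend over many source ports should show a roughly even spread, because SHA-256 output is close to uniform.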

2. Layer 7 Load Balancer (Application Layer)

- Works at the HTTP/HTTPS level.

- Can inspect headers, cookies, URLs, or even request body.

- Makes smarter routing decisions.

Examples:

- In a Hotel app: route /booking traffic to Booking pods, /staff to Staff pods.

- In a Restaurant app: forward /orders to Order Processing pods, /menu to Menu pods.

- In a Travel Agency app: send /search to Search pods, /payments to Payment pods.

Pros: Content-aware, enables microservices routing.

Cons: More CPU-intensive than L4, because it must parse HTTP and, for HTTPS, terminate TLS.
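The path-based examples above reduce to a prefix lookup on the request URL. Here is a minimal Python sketch of that routing decision, using longest-prefix matching on whole path segments, similar in spirit to Kubernetes Ingress `Prefix` paths; the route table and service names are hypothetical, modeled on the Restaurant example.

```python
from typing import Optional

# Hypothetical Restaurant-app routing table: path prefix -> backend service.
ROUTES = {
    "/orders": "order-processing-svc",
    "/menu": "menu-svc",
    "/": "frontend-svc",
}


def _segments(path: str) -> list[str]:
    return [s for s in path.split("/") if s]


def route(path: str, routes: dict[str, str] = ROUTES) -> Optional[str]:
    """Return the service for the longest prefix that matches whole path segments."""
    request = _segments(path)
    best, best_len = None, -1
    for prefix, service in routes.items():
        parts = _segments(prefix)
        # '/orders' matches '/orders' and '/orders/17', but not '/ordersxyz'.
        if request[: len(parts)] == parts and len(parts) > best_len:
            best, best_len = service, len(parts)
    return best


print(route("/orders/17/status"))  # order-processing-svc
print(route("/menu"))              # menu-svc
print(route("/ordersxyz"))         # frontend-svc (falls through to '/')
```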

Layer 4 LBs are best for raw speed and simple distribution.

Layer 7 LBs are best for intelligent routing in microservice-based apps.

Practical Cloud Examples

- AWS Elastic Load Balancing (ELB):

  - Network Load Balancer (NLB) → Layer 4.

  - Application Load Balancer (ALB) → Layer 7.

- NGINX / HAProxy (self-managed open-source load balancers that can operate at Layer 4 or Layer 7).

- Kubernetes Ingress Controller → operates as an L7 load balancer for containers.

Auto-Scaling Group (ASG) in Depth

An Auto-Scaling Group is a cloud-native feature that manages a pool of identical compute resources (EC2 instances on AWS; the equivalents are Azure VM Scale Sets and GCP Managed Instance Groups). The group keeps the right number of servers running as real-time demand changes.

How it Works

- Baseline Configuration

  - You define a Launch Template/Configuration → OS image, instance type, network settings, etc.

  - You set minimum, maximum, and desired instance counts.

- Monitoring Metrics

  - Metrics (CPU %, memory usage, request count, queue length, etc.) are continuously tracked.

  - These come from CloudWatch (AWS), Azure Monitor, or GCP Cloud Monitoring.

- Scaling Out

  - When demand increases (e.g., CPU > 70% for 5 minutes), the ASG launches new instances automatically.

  - These register behind the Load Balancer, so scaling is seamless to users.

- Scaling In

  - During off-peak hours (e.g., CPU < 20% for 10 minutes), the ASG terminates extra instances.

  - This saves costs while still meeting performance targets.

- Self-Healing

  - If an instance fails health checks, the ASG automatically replaces it.

  - This ensures resilience without manual intervention.

Example Scaling Policy

| Condition | Action | Result |
|---|---|---|
| CPU > 70% for 5 min | Add 1 instance | Scale out |
| CPU < 20% for 10 min | Remove 1 instance | Scale in |
| Instance unhealthy | Replace instance | Self-heal |
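The table's scale-out and scale-in rules can be turned into a small simulation over per-minute average CPU readings. This Python sketch is a simplified model, not a cloud provider's evaluation logic, and it rests on stated assumptions: one sample per minute, a group size between 2 and 6 instances, and a cleared evaluation window after each action as a crude stand-in for a cooldown. The self-heal row is left out because replacing an unhealthy instance does not change the count.

```python
from collections import deque

SCALE_OUT_CPU, SCALE_OUT_MINUTES = 70.0, 5   # CPU > 70% for 5 min  -> add 1
SCALE_IN_CPU, SCALE_IN_MINUTES = 20.0, 10    # CPU < 20% for 10 min -> remove 1


def simulate(cpu_per_minute: list[float], instances: int,
             min_size: int = 2, max_size: int = 6) -> list[int]:
    """Return the instance count after each one-minute CPU sample."""
    window: deque = deque(maxlen=SCALE_IN_MINUTES)  # longest window any rule needs
    counts = []
    for cpu in cpu_per_minute:
        window.append(cpu)
        recent = list(window)
        if (len(recent) >= SCALE_OUT_MINUTES
                and all(c > SCALE_OUT_CPU for c in recent[-SCALE_OUT_MINUTES:])
                and instances < max_size):
            instances += 1
            window.clear()  # crude cooldown: start a fresh evaluation window
        elif (len(recent) >= SCALE_IN_MINUTES
              and all(c < SCALE_IN_CPU for c in recent)
              and instances > min_size):
            instances -= 1
            window.clear()
        counts.append(instances)
    return counts


# Ten busy minutes at 85% CPU, then ten quiet minutes at 10%, starting from 2 instances.
load = [85.0] * 10 + [10.0] * 10
print(simulate(load, instances=2))
# [2, 2, 2, 2, 3, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 3]
```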

Why ASGs Matter

- Cost Efficiency → pay only for resources you need.

- Performance Stability → avoids server overload during peak traffic (e.g., hotel booking surge during holidays).

- Reliability → self-healing reduces downtime risk.

In practice:

- Hotel/Resort app: spikes during seasonal booking → scale out automatically.

- Restaurant app: heavy order load at lunch/dinner hours → ASG grows/shrinks daily.

- Travel Agency app: sudden bursts during promotions → scale out quickly, scale in after the campaign ends.

Kubernetes: A Deeper Dive into Auto-Scaling

Kubernetes (often abbreviated as K8s) is an open-source container orchestration platform originally developed by Google. Its primary purpose is to automate deployment, scaling, and management of containerized applications. While Virtual Machines (VMs) require managing entire OS instances, Kubernetes focuses on containers, which are lightweight, portable, and faster to scale.

Core Kubernetes Components (Relevant to Scaling)

| Component | Role in Scaling |
|---|---|
| Pods | The smallest deployable unit in Kubernetes, wrapping one or more containers. Scaling means increasing/decreasing the number of pods. |
| ReplicaSet | Ensures a specified number of pod replicas are always running. Works with Deployments to manage pod scaling automatically. |
| Deployment | Declaratively manages application pods. When you request scaling, the Deployment updates the ReplicaSet to add/remove pods. |
| Cluster Autoscaler | Adjusts the size of the underlying cluster (nodes/VMs): adds nodes when pods cannot be scheduled for lack of capacity and removes underutilized nodes. |
| Horizontal Pod Autoscaler (HPA) | Adjusts the number of pods in a Deployment based on metrics like CPU, memory, or custom app metrics. |
| Vertical Pod Autoscaler (VPA) | Adjusts the CPU/memory requests of pods without changing the pod count (typically by recreating pods with the new values). |
| Ingress + Service (Load Balancing) | Ensures that scaled pods are discoverable and receive traffic evenly. |

Kubernetes Scaling Mechanisms

- Horizontal Pod Autoscaling (HPA)

  - Adds or removes pods based on metrics.

  - Example: if average CPU utilization (measured against the pods' CPU requests) rises above the 70% target, the HPA adds pods; if it falls well below, the HPA removes them.

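```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: webapp-hpa
spec:
  scaleTargetRef:              # the workload whose replica count the HPA controls
    apiVersion: apps/v1
    kind: Deployment
    name: webapp
  minReplicas: 2               # never scale below 2 pods
  maxReplicas: 10              # never scale above 10 pods
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the pods' CPU requests
```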

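This keeps the webapp Deployment between 2 and 10 replicas, adding or removing pods to hold average CPU utilization near 70% of the pods' CPU requests (the containers must declare CPU requests for a Utilization target to work).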
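The Kubernetes documentation describes the HPA's core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change made while the ratio stays within a tolerance (0.1 by default). The Python sketch below reproduces only that formula and the `minReplicas`/`maxReplicas` clamp, using the values from the manifest above as defaults; it ignores the scale-down stabilization window, missing metrics, and pods that are not yet ready, all of which the real controller handles.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float = 70.0, min_replicas: int = 2,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count given by the HPA formula for a Utilization target."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90))   # 6  -> ceil(4 * 90/70) = ceil(5.14)
print(desired_replicas(4, 72))   # 4  -> ratio 1.03 is within the 10% tolerance
print(desired_replicas(9, 140))  # 10 -> ceil(18) capped at maxReplicas
print(desired_replicas(6, 20))   # 2  -> ceil(1.71) = 2
```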

- Vertical Pod Autoscaling (VPA)

  - Instead of scaling out (more pods), VPA scales up by adjusting pod resource requests.

  - Useful for workloads that cannot easily be split into multiple pods.

- Cluster Autoscaling

  - If pods cannot be scheduled because the existing nodes lack capacity, the Cluster Autoscaler requests more nodes from the cloud provider.

  - Integrates with AWS EC2 Auto-Scaling Groups, Azure VM Scale Sets, or GCP Managed Instance Groups.

  - When workloads decrease, underutilized nodes can be removed to save cost.
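To see when Cluster Autoscaling kicks in, it helps to think of pending pods being packed onto nodes. The Python sketch below estimates how many new nodes a set of pending pods needs, using first-fit-decreasing bin packing on CPU requests alone. It is a deliberate simplification, not the Cluster Autoscaler's algorithm (which simulates the scheduler and considers memory, affinity rules, and which node group to expand); the node size and pod requests are hypothetical.

```python
def extra_nodes_needed(free_cpu_per_node: list[float], pending_pod_cpu: list[float],
                       new_node_cpu: float) -> int:
    """First-fit-decreasing estimate of new nodes needed for pending pods (CPU only)."""
    free = list(free_cpu_per_node)  # spare CPU on existing nodes, in cores
    added = 0
    for request in sorted(pending_pod_cpu, reverse=True):
        if request > new_node_cpu:
            raise ValueError(f"a pod requesting {request} cores fits on no node")
        for i, spare in enumerate(free):
            if request <= spare:
                free[i] -= request  # schedule the pod on the first node with room
                break
        else:
            # No existing or newly added node has room: provision another node.
            free.append(new_node_cpu - request)
            added += 1
    return added


# Two nodes with 0.5 and 1.0 spare cores; six pending pods of 1.5 cores each;
# each new node offers 4 cores of allocatable CPU.
print(extra_nodes_needed([0.5, 1.0], [1.5] * 6, new_node_cpu=4.0))  # 3
```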

Kubernetes Scaling Workflow

Here’s what happens step by step:

- Metrics Collection → Kubernetes collects metrics (via Metrics Server, Prometheus, or custom adapters).

- Autoscaler Decision → HPA/VPA analyzes whether scaling is needed.

- Scaling Action → More pods are created (HPA) or pods get more resources (VPA).

- Load Balancing → The Kubernetes Service + Ingress Controller distributes traffic to the new pods once they pass their readiness probes.

- Cluster Adjustment → If new pods cannot be scheduled because node resources are exhausted, the Cluster Autoscaler provisions new cloud VMs automatically.

Why Kubernetes Scaling Matters

- Granular scaling → Scale pods in seconds (when nodes have spare capacity) instead of booting entire VMs.

- Cost optimization → Scale down unused pods/nodes when traffic is low.

- Resilience → If a pod crashes, Kubernetes automatically replaces it.

- Cloud-agnostic → The same scaling configuration works on AWS, Azure, GCP, or on-premises clusters, though load-balancer and node-provisioning integrations differ by provider.

Service + Ingress Controller

A Service in Kubernetes provides a stable network endpoint to expose a group of Pods, ensuring reliable communication within or outside the cluster even if Pods are dynamically created or destroyed. An Ingress Controller, on the other hand, manages external access to Services, typically via HTTP/HTTPS, by acting as an intelligent entry point that routes traffic based on rules such as hostnames or paths.

Together, they form a powerful combination: the Service ensures consistent internal networking, while the Ingress Controller handles controlled and secure external traffic management.

Services and Ingress Controllers make applications accessible, scalable, and manageable. Without Services, Pods would be hard to reach because they are ephemeral and their IP addresses change; without an Ingress Controller, exposing each application externally would require its own LoadBalancer-type Service or NodePort, which is inefficient. Combined, they provide seamless internal communication and efficient external routing, reduce complexity and resource usage, and support features such as SSL/TLS termination, load balancing, and path-based routing.
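The core idea behind a Service is that clients use one stable name while the set of backing Pods is recomputed from a label selector. A minimal Python sketch of that selection follows; the `Pod` dataclass and its fields are hypothetical simplifications of what Kubernetes tracks in EndpointSlices, only equality-based selectors are handled, and only ready Pods are returned, since those are the ones a Service sends traffic to.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    ready: bool
    labels: dict[str, str] = field(default_factory=dict)


def service_endpoints(selector: dict[str, str], pods: list[Pod]) -> list[str]:
    """IPs of ready pods whose labels contain every key/value in the selector."""
    return [
        p.ip for p in pods
        if p.ready and all(p.labels.get(k) == v for k, v in selector.items())
    ]


pods = [
    Pod("booking-7d9f-a", "10.1.0.4", True, {"app": "booking"}),
    Pod("booking-7d9f-b", "10.1.0.9", False, {"app": "booking"}),  # still starting
    Pod("staff-5c2e-a", "10.1.0.5", True, {"app": "staff"}),
]
print(service_endpoints({"app": "booking"}, pods))  # ['10.1.0.4']

# The HPA adds a replica: the Service name stays the same, the endpoint list grows.
pods.append(Pod("booking-7d9f-c", "10.1.0.12", True, {"app": "booking"}))
print(service_endpoints({"app": "booking"}, pods))  # ['10.1.0.4', '10.1.0.12']
```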

Elastic Scaling Architectures

1. ASG-based (VM level)

Users → Load Balancer → Auto-Scaling Group (VMs)

Pros: Mature, supported in all clouds.

Cons: Slower to scale (VM boot time).

2. Kubernetes-based (Container level)

Users → Ingress → Services → Pods (scaled by HPA)

Pros: Faster scaling, fine-grained microservices.

Cons: More complex to manage.

Application in Hospitality & Travel Systems

1. Hotel / Resort Internal Management System

Workloads

- Guest reservations (high during weekends, holidays).

- API integrations with booking portals.

- Staff management (relatively constant).

Scaling Strategy

- Use an ASG for the front-end web servers.

- Use a K8s HPA for the reservation microservice.

- Use a queue (Kafka) for deferred billing/invoice jobs.

2. Restaurant Internal Management System

Workloads

- Food orders (peak lunch/dinner).

- Table reservations (steady).

- Kitchen staff dashboard (internal).

Scaling Strategy

- HPA for “Order Processing” pods.

- ASG for self-managed backend database read replicas.

- Scale down after hours → cost savings.

3. Travel Agency Internal Management System

Workloads

- Travel package search (spikes in holidays).

- Analytics for user recommendations.

- Payment gateways (consistent load).

Scaling Strategy

- HPA for Search API pods.

- ASG for analytics VMs (batch jobs).

- Queue for recommendation engine workloads.

| Application | Traffic Pattern | Scaling Benefit | Cost Efficiency |
|---|---|---|---|
| Hotel | Seasonal peaks | Keeps bookings live 24/7 | Avoids off-season overpaying |
| Restaurant | Meal-time spikes | Smooth ordering during rush | Scales down at night |
| Travel Agency | Holiday surges | Handles search/recommendations fast | No idle analytics servers |

Scaling Mapping (Travel Agency Services)

| Service | Scaling Tech | Trigger Metric |
|---|---|---|
| Search Service | HPA | CPU, request rate |
| Analytics Engine | ASG | Job queue length |
| Payment Gateway | Static HA (fixed redundant instances) | None (always-on) |
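For the Analytics Engine row, a job queue length is typically converted into a target instance count by dividing the backlog by how many jobs one instance can clear within an acceptable delay; AWS describes this as a “backlog per instance” metric for Auto Scaling groups that consume from Amazon SQS. The Python sketch below applies that arithmetic with hypothetical numbers, assuming each instance processes one job at a time.

```python
import math


def instances_for_backlog(queue_length: int, seconds_per_job: float,
                          max_wait_seconds: float, min_size: int = 1,
                          max_size: int = 20) -> int:
    """Instances needed so the current backlog clears within max_wait_seconds."""
    acceptable_backlog_per_instance = max_wait_seconds / seconds_per_job
    needed = math.ceil(queue_length / acceptable_backlog_per_instance)
    return max(min_size, min(max_size, needed))


# Hypothetical nightly analytics run: each job takes ~30 s and results are wanted
# within 10 minutes, so one instance can absorb a backlog of 20 jobs.
print(instances_for_backlog(150, seconds_per_job=30, max_wait_seconds=600))  # 8
print(instances_for_backlog(0, seconds_per_job=30, max_wait_seconds=600))    # 1
```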

Elastic scaling via ASG + Kubernetes HPA is a technical backbone for cloud-native apps. For hospitality & travel systems:

- Hotels → smooth seasonal booking.

- Restaurants → reliable peak-hour orders.

- Travel Agencies → efficient package search & planning.

By matching the scaling strategy to each workload type, businesses achieve a better user experience, cost efficiency, and resilience.